Here we are, back at it again: Round 3!! Last time we left off with using OpenHAB to automate the printer lights on job start and stop. Now we take things one step further by automating more steps when the job stops, and by me just being lazy overall about how I get my files onto OctoPi. So, without further ado, let’s jump into our first task: syncing files with rsync!

We are Syncing! We are Syncing!

Let’s talk about how I run my print jobs. I start in one of two ways: grabbing files off Thingiverse.com (95% of the time…) or doing work in Blender and making something original. Either way, I end up with an STL file that I throw into Cura. From there, Cura spits out the GCODE that is ready for upload. So I have a folder that holds all of my print files, and that folder is made up of folders that have folders, in folders, with folders for folders. My OCD gets out of hand sometimes; any DBA would cringe at my normalization overkill… Anyway! The point is, with the messy folders on my desktop not translating well to my Pi, you can see how things can easily get lost.

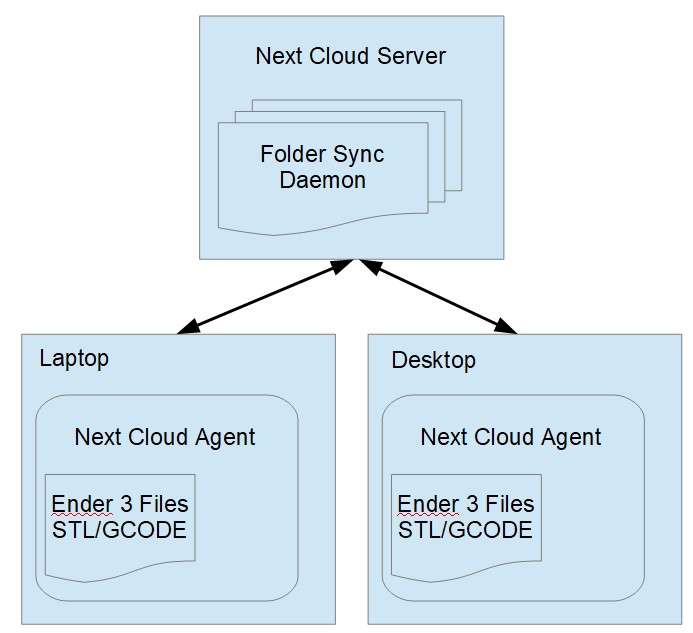

Syncing with Nextcloud

Let’s take a quick step back and talk about the backbone. While I do all the STL and GCODE stuff on my desktop, my goal is to keep things stored on my Nextcloud server so they are available 24/7. To get this done, Nextcloud has an amazing Windows client that syncs your files with your server in real time. Think of it like Google Drive’s desktop client, or OneDrive. Going this route also gives me the chance to do Blender/Thingiverse things from my laptop and still keep everything in sync on the Pi.

Cool, that was the easy part! Now we get into how we are going to sync Nextcloud to the Pi. Let’s start with setting up our SSH keys!

SSSSSSH…. I’m RSyncing…

Heh, double pun because SSH and sssshh and rsy…. ok I’m done. The reason for setting up an SSH handshake is to make authentication between devices easier and more secure. Instead of sending passwords back and forth, the Pi authenticates to the server with an SSH key pair: the Pi’s public key is copied to the server, and the private key never leaves the Pi.

I’m going to skim over some of the details of setting up SSH keys and doing the copy, since the commands nowadays are very easy to use and there are a million tutorials like this one and this one to help you out. The 10,000-foot view: run ssh-keygen (I just use all the defaults, nothing fancy), then run ssh-copy-id from the Pi.

ssh-copy-id -i ~/.ssh/id_rsa.pub frank@mynextcloudserver

Verify the connection by just running SSH from the Pi against the server.

ssh frank@mynextcloudserver

If you land at the server’s shell prompt without being asked for a password, then yay, it worked! If it asks for a password, double-check the tutorials linked above.
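If you want a check that a script can run instead of eyeballing the prompt, here is a minimal sketch. The function name check_key_login is made up for illustration; the only real pieces are ssh's BatchMode and ConnectTimeout options, which make ssh fail instead of prompting for a password or hanging.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original setup): succeeds only if
# key-based login works without any password prompt.
check_key_login() {
    local target="$1"
    # BatchMode=yes makes ssh fail instead of asking for a password;
    # ConnectTimeout=5 stops it from hanging if the server is unreachable.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$target" true; then
        echo "key login OK for $target"
    else
        echo "key login FAILED for $target" >&2
        return 1
    fi
}
```

Running `check_key_login frank@mynextcloudserver` from the Pi should print the OK line once the key has been copied over.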

Dude can we get to Rsync already…

Ok Ok… now we get to the meat of it, for real this time! Rsync! Rsync is a command-line tool for efficiently copying files locally or remotely. It does that by sending over a list of files and their attributes (by default it compares size and modification time). If a source file’s attributes match the destination file, rsync skips sending that file (unless you override that and send it anyway).
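To see that skip behavior for yourself, here is a small local sketch. It assumes rsync is installed and uses two throwaway temp folders; the function name demo_quick_check and the file contents are made up for the demo.

```shell
#!/usr/bin/env bash
# Demo of rsync's default size + modification-time check on two temp folders.
demo_quick_check() {
    local src dst
    src=$(mktemp -d) || return 1
    dst=$(mktemp -d) || return 1
    echo "G28 ; home all axes" > "$src/part.gcode"

    rsync -a "$src/" "$dst/"                 # first run copies the file

    echo "second run:"
    rsync -ai "$src/" "$dst/"                # attributes match, so nothing is listed

    touch -t 200101010000 "$src/part.gcode"  # change only the modification time
    echo "after touch:"
    rsync -ai "$src/" "$dst/"                # the file is listed and sent again

    rm -rf "$src" "$dst"
}
```

The -i (itemize changes) flag prints one line per item rsync decides to update, which makes it easy to see what it thinks has changed.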

Here is an example of rsync. (Note! When you run this command with SSH keys set up, there’s no need for passwords! Yay!)

rsync -av /source/folder/path/ username@remoteserver:/remote/folder/path/

After tweaking this command a bit, going off of the rsync documentation and a shit ton of Stack Overflow 🙂, the command below brings over only the .gcode files from my Nextcloud server and throws them onto OctoPrint!

rsync -av --prune-empty-dirs --update --include="*/" --exclude="*.gcode.*" --include="*.gcode" --exclude="*" username@mynextcloud:/path/to/my/Ender3/folder/ /path/to/my/octoprint/folder/on/PI/

When Rsync works too well…

Unfortunately, running the command above isn’t everything that needs to happen. Apparently, something in OctoPrint scans the folders for changes and, in doing so, changes the file attributes. On the next run, rsync compares the file list and thinks everything needs to be sent again. Then we end up with duplicates, bloated folders, and a full disk.

My current workaround is to add some remove commands to the sync script, to guarantee that there is only one copy of whatever gets synced over.

# Delete all files in the uploads folder
rm -r /path/to/my/octoprint/folder/on/PI/*

# Delete any hidden files in the folder too
rm -r /path/to/my/octoprint/folder/on/PI/.metadata.*

# Sync all files from Nextcloud down to OctoPrint
rsync -av --prune-empty-dirs --update --include="*/" --exclude="*.gcode.*" --include="*.gcode" --exclude="*" username@mynextcloud:/path/to/my/Ender3/folder/ /path/to/my/octoprint/folder/on/PI/

While it’s not the most elegant solution and it takes more time, at least it works 😛
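One thing about deleting with a glob: if the path is ever mistyped or empty, rm -r on a wildcard can hit the wrong place. Here is a rough sketch of a more defensive way to clear the folder. The helper name clear_uploads is hypothetical and this is not what my script does; it just shows the idea using find.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original script): empty a folder's
# contents, hidden files included, while leaving the folder itself in place.
clear_uploads() {
    local dest="$1"
    # Refuse to run on an empty or missing path so a typo can't wipe the wrong place.
    if [ -z "$dest" ] || [ ! -d "$dest" ]; then
        echo "clear_uploads: not a directory: '$dest'" >&2
        return 1
    fi
    # -mindepth 1 skips the folder itself; -delete removes everything beneath it.
    find "$dest" -mindepth 1 -delete
}
```

In the sync script this would replace the two rm lines, for example `clear_uploads /path/to/my/octoprint/folder/on/PI` followed by the same rsync command as before.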

Ok, now how do we run this script?

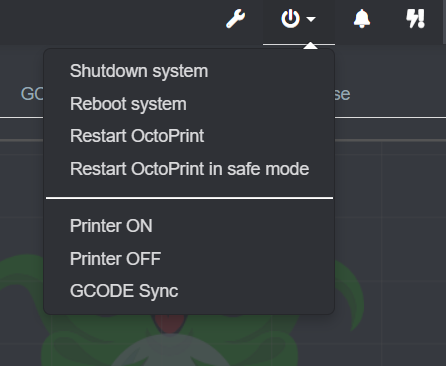

Glad you asked! Remember a few posts ago when I talked about setting up a power relay to turn my printer on and off from OctoPrint? We are going to add onto that menu and, underneath the power options, just add an entry for GCODE SYNC. Below is a snippet of my config.yaml file.

system:
  actions:
  - action: poweron
    command: python ~/path/to/printerpower.py ON
    confirm: Turn ON Printer?
    name: Printer ON
  - action: poweroff
    command: python ~/path/to/printerpower.py OFF
    confirm: Turn OFF Printer?
    name: Printer OFF
  - action: GCODE Sync
    command: ~/path/to/gcode-sync.sh
    confirm: Sync GCODE files from Cloud?
    name: GCODE Sync

With the entry added and OctoPrint restarted, we now have a new menu item. One click and voilà! Any gcode files I make on my desktop or laptop in my Ender 3 folder get pulled down to OctoPrint when I hit GCODE Sync 🙂

But wait! There is More!!

Since I was in the rsync neighborhood, I figured I would do one more thing with it. We’ve used it to throw files onto OctoPrint, but what about pulling files off? Like… when a print job is finished, pushing the time-lapse file up to Nextcloud? Let’s throw some enhancements into our print complete script, shall we?

The rsync command this time around is less complicated than the one above. However, there are a few caveats to consider. I don’t want to upload time-lapses of failed print jobs, so I need to filter those files out. I also need to run a command on the Nextcloud server to tell it there are new files in the time-lapse folder that it needs to add to its database. I could wait for the cron job to come around and do that for me, but I’m impatient…
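The filtering caveat can be sketched like this. It assumes failed time-lapses have -fail right before the file extension, which is the pattern my own script matches on; the function name remove_failed_timelapses is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete time-lapse files whose name ends in -fail.<ext>
# from a single folder (no recursion), printing each file it removes.
remove_failed_timelapses() {
    local dir="$1"
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
        echo "remove_failed_timelapses: not a directory: '$dir'" >&2
        return 1
    fi
    # -maxdepth 1 stays in this folder; -type f ignores subfolders.
    find "$dir" -maxdepth 1 -type f -name '*-fail.*' -print -delete
}
```

Matching on `*-fail.*` covers any extension in one go, so it does not matter whether the time-lapse was rendered as .mp4 or .mpg.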

Oh, and one last thing, related to my last post about bailing on IFTTT: I no longer get notifications when my print job finishes. So I’m using a Comcast relay to send notifications, via the mail command on the Pi.

Great, so with all things considered, our script ends up looking like this!

# Send the command to turn off the printer lights
curl -X POST --header "Content-Type: text/plain" --header "Accept: application/json" -d "OFF" "http://myOpenHab/PrinterLights"

# Send the email to me letting me know the print job is done
echo "Print job complete!" | mail -s "Print Complete" -r someemail@sarnelli3d.com someotheremail@gmail.com

# Delete any time-lapse files with -fail in the name
rm -r /mypi/octopi/timelapse/*-fail.mp4
rm -r /mypi/octopi/timelapse/*-fail.mpg

# Sync files to Nextcloud
rsync -av --protect-args --exclude='*/' /mypi/octopi/timelapse/ "username@nextcloudserver:/path/to/Time Lapses/"

# Remove all files from timelapse
rm -r /home/pi/.octoprint/timelapse/*

# Run a scan now on Nextcloud so the new files show up
ssh username@nextcloudserver "cd '/path/to/my/working/folder/' && php occ files:scan --path='path/to/my/Time Lapses/'"

So Rsync or Rswim?

Ok, it wasn’t that bad, but there were a few moments where it was more rstink than rsync. Man, there are a ton of puns you can make with rsync… I might do another trilogy with something else I’ve been playing around with. Maybe like a Star Wars thing and do multiple trilogies. Polygies, Nth-o-gies? Anyway, thanks for coming by, stay tuned for more! Keep calm and keep automating!!! 🙂